Tool use is essential for enabling robots to perform complex real-world tasks, but learning such skills requires extensive datasets. While teleoperation is widely used, it is slow, delay-sensitive, and poorly suited for dynamic tasks. In contrast, human videos offer a natural way to collect data without specialized hardware, though viewpoint variations and embodiment gaps make them challenging to use for robot learning. To address these challenges, we propose a framework that transfers tool-use knowledge from humans to robots. To improve the policy's robustness to viewpoint variations, we use two RGB cameras to reconstruct 3D scenes and apply Gaussian splatting for novel view synthesis. We reduce the embodiment gap by using segmented observations and tool-centric, task-space actions, which enables embodiment-invariant visuomotor policy learning. Compared with diffusion policies trained on teleoperation data under equivalent time budgets, our method achieves a 71% improvement in task success and a 77% reduction in data collection time. It also reduces data collection time by 41% compared with the state-of-the-art data collection interface.
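
The abstract does not spell out how a tool-centric, task-space action is executed on a particular robot. The following is a minimal sketch under stated assumptions, not the paper's implementation: the policy outputs a desired tool pose in a shared world frame, and the tool is held rigidly, so its pose relative to the gripper, `T_ee_tool`, is fixed and known. The robot-specific end-effector target then follows from composing rigid transforms. All function names (`matmul`, `rigid_inverse`, `pose`, `ee_target_from_tool_action`) and all numbers are hypothetical.

```python
import math
from typing import List

# 4x4 homogeneous transform stored as a list of rows.
Mat4 = List[List[float]]


def matmul(a: Mat4, b: Mat4) -> Mat4:
    """Return the matrix product a @ b (T_a_b @ T_b_c = T_a_c)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid_inverse(t: Mat4) -> Mat4:
    """Invert a rigid transform [R | p; 0 1] as [R^T | -R^T p; 0 1]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    p_inv = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [p_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def pose(yaw: float, x: float, y: float, z: float) -> Mat4:
    """Hypothetical helper: rotation about z by `yaw` radians plus a translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, x], [s, c, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def ee_target_from_tool_action(t_world_tool: Mat4, t_ee_tool: Mat4) -> Mat4:
    """Map a tool-pose action to an end-effector target.

    Since T_world_tool = T_world_ee @ T_ee_tool, the target is
    T_world_ee = T_world_tool @ inv(T_ee_tool).
    """
    return matmul(t_world_tool, rigid_inverse(t_ee_tool))


# Hypothetical action: tool tip at (0.5, 0.0, 0.3) m, rotated 90 degrees about z.
# Hypothetical grasp: the tool tip sits 0.15 m along the gripper's x axis.
action = pose(math.pi / 2, 0.5, 0.0, 0.3)
grasp = pose(0.0, 0.15, 0.0, 0.0)
target = ee_target_from_tool_action(action, grasp)
print([round(target[i][3], 3) for i in range(3)])  # [0.5, -0.15, 0.3]
```

In this formulation only `T_ee_tool` depends on the embodiment, which is one way a single tool-pose action space could be shared between a human hand and a robot gripper.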

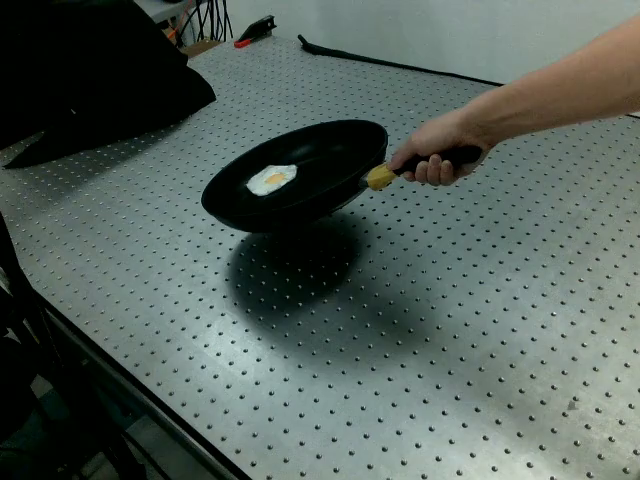

Pan flipping involves dynamically flipping various objects, such as eggs, burger buns, and meat patties. The task demands precision, agility, and the ability to adapt to different motion-control challenges. Our framework enables robots to learn such highly dynamic movements.

Our framework is robust to various perturbations, including a moving camera, a moving base, and perturbations applied by humans.
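
According to the abstract, robustness to viewpoint changes comes from rendering novel views of the reconstructed scene with Gaussian splatting. The sketch below covers only one hypothetical piece of such a pipeline: choosing perturbed camera poses around the recorded camera so that every sampled view still looks at the workspace. Rendering those poses with a Gaussian splatting renderer is not shown. The OpenCV camera convention (x right, y down, z forward), the z-up world frame, the ±20° azimuth and ±10° elevation ranges, and all names are assumptions.

```python
import math
import random
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat4 = List[List[float]]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v: Vec3) -> Vec3:
    norm = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / norm, v[1] / norm, v[2] / norm)


def look_at(eye: Vec3, target: Vec3, up: Vec3 = (0.0, 0.0, 1.0)) -> Mat4:
    """Camera-to-world pose in the OpenCV convention (x right, y down, z forward)."""
    forward = _normalize(_sub(target, eye))
    right = _normalize(_cross(forward, up))
    down = _cross(forward, right)  # already unit length: forward and right are orthonormal
    return [[right[i], down[i], forward[i], eye[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def sample_novel_views(eye: Vec3, target: Vec3, n: int, max_az_deg: float = 20.0,
                       max_el_deg: float = 10.0, seed: int = 0) -> List[Mat4]:
    """Jitter the nominal camera on a sphere around `target`, keeping it aimed at `target`."""
    rng = random.Random(seed)
    dx, dy, dz = _sub(eye, target)
    radius = math.sqrt(dx * dx + dy * dy + dz * dz)
    azimuth = math.atan2(dy, dx)
    elevation = math.asin(dz / radius)
    limit = math.radians(80.0)  # stay away from the poles, where `up` and `forward` align
    poses = []
    for _ in range(n):
        az = azimuth + math.radians(rng.uniform(-max_az_deg, max_az_deg))
        el = elevation + math.radians(rng.uniform(-max_el_deg, max_el_deg))
        el = max(-limit, min(limit, el))
        new_eye = (target[0] + radius * math.cos(el) * math.cos(az),
                   target[1] + radius * math.cos(el) * math.sin(az),
                   target[2] + radius * math.sin(el))
        poses.append(look_at(new_eye, target))
    return poses


# Hypothetical nominal camera 1 m in front of and 0.5 m above the workspace origin.
views = sample_novel_views(eye=(1.0, 0.0, 0.5), target=(0.0, 0.0, 0.0), n=8)
```

Keeping the radius fixed and only varying azimuth and elevation is a simple design choice that keeps the workspace at a similar scale in every rendered view; other perturbation schemes are equally plausible.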

This section highlights the precision of our framework on tasks that require high accuracy, such as hammering a nail and wine balancing.

This example shows a 3D reconstruction generated with MASt3R from two input images.
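
MASt3R regresses dense 3D pointmaps directly from the image pair, so it does not need the step below. The sketch only illustrates the standard pinhole geometry that relates pixels, depth, and camera poses when points from two calibrated views are brought into one shared frame. The intrinsics, the 0.2 m camera offset, the OpenCV axis convention, and all names (`backproject`, `transform`, `fuse_views`) are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Mat4 = Sequence[Sequence[float]]


def backproject(u: float, v: float, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Point:
    """Invert the pinhole projection u = fx * X / Z + cx, v = fy * Y / Z + cy."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def transform(t_ref_cam: Mat4, p: Point) -> Point:
    """Express a camera-frame point in the reference frame using T_ref_cam."""
    return tuple(t_ref_cam[i][0] * p[0] + t_ref_cam[i][1] * p[1]
                 + t_ref_cam[i][2] * p[2] + t_ref_cam[i][3] for i in range(3))


def fuse_views(views) -> List[Point]:
    """Merge (pixels, intrinsics, T_ref_cam) tuples into one point cloud.

    pixels: iterable of (u, v, depth); intrinsics: (fx, fy, cx, cy).
    """
    cloud: List[Point] = []
    for pixels, (fx, fy, cx, cy), t_ref_cam in views:
        for u, v, depth in pixels:
            cloud.append(transform(t_ref_cam, backproject(u, v, depth, fx, fy, cx, cy)))
    return cloud


# Hypothetical setup: camera 1 defines the reference frame, camera 2 sits 0.2 m to its right.
K = (600.0, 600.0, 320.0, 240.0)
identity = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
cam2_in_cam1 = [[1.0, 0.0, 0.0, 0.2], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
cloud = fuse_views([
    ([(320.0, 240.0, 1.0), (380.0, 240.0, 1.0)], K, identity),
    ([(320.0, 240.0, 1.0)], K, cam2_in_cam1),
])
print(cloud)  # [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0), (0.2, 0.0, 1.0)]
```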

Human manipulation is more natural and intuitive than teleoperation. Consequently, our policy generates significantly faster and smoother action trajectories than policies trained on data collected with teleoperation devices.
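
This page does not state how smoothness is measured. One common proxy is the mean squared jerk (the third time derivative of position), estimated here with forward finite differences on a uniformly sampled trajectory; lower values indicate smoother motion. The metric choice, the function names, and the sample trajectories are assumptions for illustration only.

```python
from typing import List, Sequence


def _diff(xs: Sequence[float], dt: float) -> List[float]:
    """Forward finite difference: (x[i+1] - x[i]) / dt."""
    return [(b - a) / dt for a, b in zip(xs, xs[1:])]


def mean_squared_jerk(positions: Sequence[Sequence[float]], dt: float) -> float:
    """Mean squared jerk magnitude of a uniformly sampled trajectory (lower is smoother)."""
    if len(positions) < 4:
        raise ValueError("need at least 4 samples to estimate jerk")
    total = 0.0
    for axis in zip(*positions):  # handle x, y, z, ... one axis at a time
        jerk = _diff(_diff(_diff(axis, dt), dt), dt)
        total += sum(j * j for j in jerk)
    return total / (len(positions) - 3)


cubic = [(float(t) ** 3,) for t in range(5)]  # x = t^3, so the jerk is a constant 6
print(mean_squared_jerk(cubic, dt=1.0))  # 36.0
```

Comparing this value between trajectories produced by two policies at the same control period `dt` gives one rough, hypothetical way to quantify the smoothness difference described above.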

Human manipulation is highly versatile, ranging from delicate precision tasks to intense, contact-rich interactions and dynamic, high-speed maneuvers, none of which traditional teleoperation systems capture effectively.

Our framework may fail to complete the task under certain conditions. If the camera moves too quickly, the system can lose track of key visual cues, causing the task to fail. In pan flipping, a burger bun may bounce out of the pan when the robot applies excessive upward force.

Our trained pan-flipping policy assists in food preparation, collaborating with humans to flip meat patties or eggs for burgers and sandwiches.

@inproceedings{chen2025tool,
  title={Tool-as-Interface: Learning Robot Policies from Observing Human Tool Use},
  author={Chen, Haonan and Zhu, Cheng and Liu, Shuijing and Li, Yunzhu and Driggs-Campbell, Katherine Rose},
  booktitle={Proceedings of Robotics: Conference on Robot Learning (CoRL)},
  year={2025}
}